# Install Apache Spark over a Hadoop cluster

- Speed: Spark enables applications running on Hadoop to run up to 100x faster in memory and up to 10x faster on disk. It achieves this in two ways. First, it minimises disk reads and writes for intermediate results, keeping them in memory and touching disk only when essential. Second, it uses a DAG scheduler, a query optimizer and a highly optimized physical execution engine.
- Fault Tolerance: Apache Spark achieves fault tolerance through its core abstraction, the RDD (Resilient Distributed Dataset). RDDs are designed to handle worker node failures: lost partitions are recomputed from the lineage of transformations that produced them.
- Lazy Evaluation: All processing (transformations) on Spark RDDs/Datasets is lazily evaluated. The output RDDs/Datasets are not computed right after a transformation, only when an action is performed.
- Dynamic nature: Spark offers over 80 high-level operators that make it easy to build parallel apps.
- Multiple Language Support: Spark supports multiple programming languages, and you can use it interactively from the Scala, Python, R, and SQL shells.
- Reusability: The same Spark code can be used for batch processing, for joining streaming data against historical data, and for running ad-hoc queries on streaming state.
- Machine Learning: Apache Spark comes with out-of-the-box support for machine learning through MLlib, which can be used for complex predictive data analytics.
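The lazy-evaluation and fault-tolerance points above can be illustrated with a small, single-machine sketch. `ToyDataset` is a hypothetical class, not part of Spark. Its `map` and `filter` transformations only record a step in a lineage list, and nothing is computed until an action (`collect` or `count`) runs. In PySpark the comparable calls would be `sc.parallelize(...).map(...).filter(...)` followed by `.collect()`, where Spark also distributes the work across executors.

```python
from typing import Callable, Iterable, List, Optional, Tuple


class ToyDataset:
    """Hypothetical single-machine stand-in for an RDD (not Spark's API).

    Transformations only append a step to the lineage; the work happens
    when an action such as collect() or count() is called.
    """

    def __init__(self, source: Iterable,
                 steps: Optional[List[Tuple[str, Callable]]] = None):
        self._source = list(source)
        self._steps = steps or []  # recorded lineage of transformations

    def map(self, fn: Callable) -> "ToyDataset":
        # Transformation: returns a new dataset and computes nothing yet.
        return ToyDataset(self._source, self._steps + [("map", fn)])

    def filter(self, pred: Callable) -> "ToyDataset":
        # Transformation: also just recorded in the lineage.
        return ToyDataset(self._source, self._steps + [("filter", pred)])

    def lineage(self) -> List[str]:
        # Names of the recorded steps, in the order they will be replayed.
        return [name for name, _ in self._steps]

    def collect(self) -> list:
        # Action: replay the lineage against the source data.
        data = self._source
        for name, fn in self._steps:
            if name == "map":
                data = [fn(x) for x in data]
            else:
                data = [x for x in data if fn(x)]
        return data

    def count(self) -> int:
        # Action: like collect(), triggers the recorded work.
        return len(self.collect())


if __name__ == "__main__":
    calls = []

    def square(x):
        calls.append(x)  # track when the function actually runs
        return x * x

    evens = ToyDataset(range(5)).map(square).filter(lambda x: x % 2 == 0)
    print(calls)            # [] : nothing has run yet
    print(evens.lineage())  # ['map', 'filter']
    print(evens.collect())  # [0, 4, 16]
    print(calls)            # [0, 1, 2, 3, 4]
```

Each `ToyDataset` keeps the full list of steps that produced it, so a lost result can be rebuilt by replaying that lineage from the source data. RDDs apply the same idea per partition when a worker fails. Real Spark also pipelines steps within a stage instead of materializing a full list after each one.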

Spark is written in Scala. It can run on a Hadoop cluster in standalone mode, using its own built-in cluster manager, or under the YARN or Mesos resource managers. Hadoop is not mandatory for Spark: it can also work with storage such as Amazon S3 or Cassandra. In most cases, however, Hadoop (HDFS) is the best fit as Spark's data storage layer.
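The choice of resource manager described above shows up as the `--master` value passed to `spark-submit`. The sketch below uses a hypothetical helper, `master_url`, to show the URL formats Spark uses for each manager:

- `spark://HOST:PORT` for the standalone cluster manager (default port 7077).
- `yarn` for YARN, where the ResourceManager address is read from the Hadoop configuration.
- `mesos://HOST:PORT` for Mesos (default port 5050).

The host names are placeholders.

```python
from typing import Optional

# Default master ports: 7077 for Spark standalone, 5050 for Mesos.
DEFAULT_PORTS = {"standalone": 7077, "mesos": 5050}


def master_url(manager: str, host: Optional[str] = None,
               port: Optional[int] = None) -> str:
    """Build a value for spark-submit --master (hypothetical helper)."""
    manager = manager.lower()
    if manager == "yarn":
        # YARN finds the ResourceManager through HADOOP_CONF_DIR or
        # YARN_CONF_DIR, so the master URL is just "yarn".
        return "yarn"
    if manager in DEFAULT_PORTS:
        if not host:
            raise ValueError(f"{manager} needs a master host")
        scheme = "spark" if manager == "standalone" else "mesos"
        return f"{scheme}://{host}:{port or DEFAULT_PORTS[manager]}"
    raise ValueError(f"unknown cluster manager: {manager}")


if __name__ == "__main__":
    print(master_url("standalone", "master-node"))  # spark://master-node:7077
    print(master_url("yarn"))                       # yarn
```

For example, `spark-submit --master yarn --deploy-mode cluster app.py` would submit a hypothetical `app.py` to YARN, with the driver running inside the cluster.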

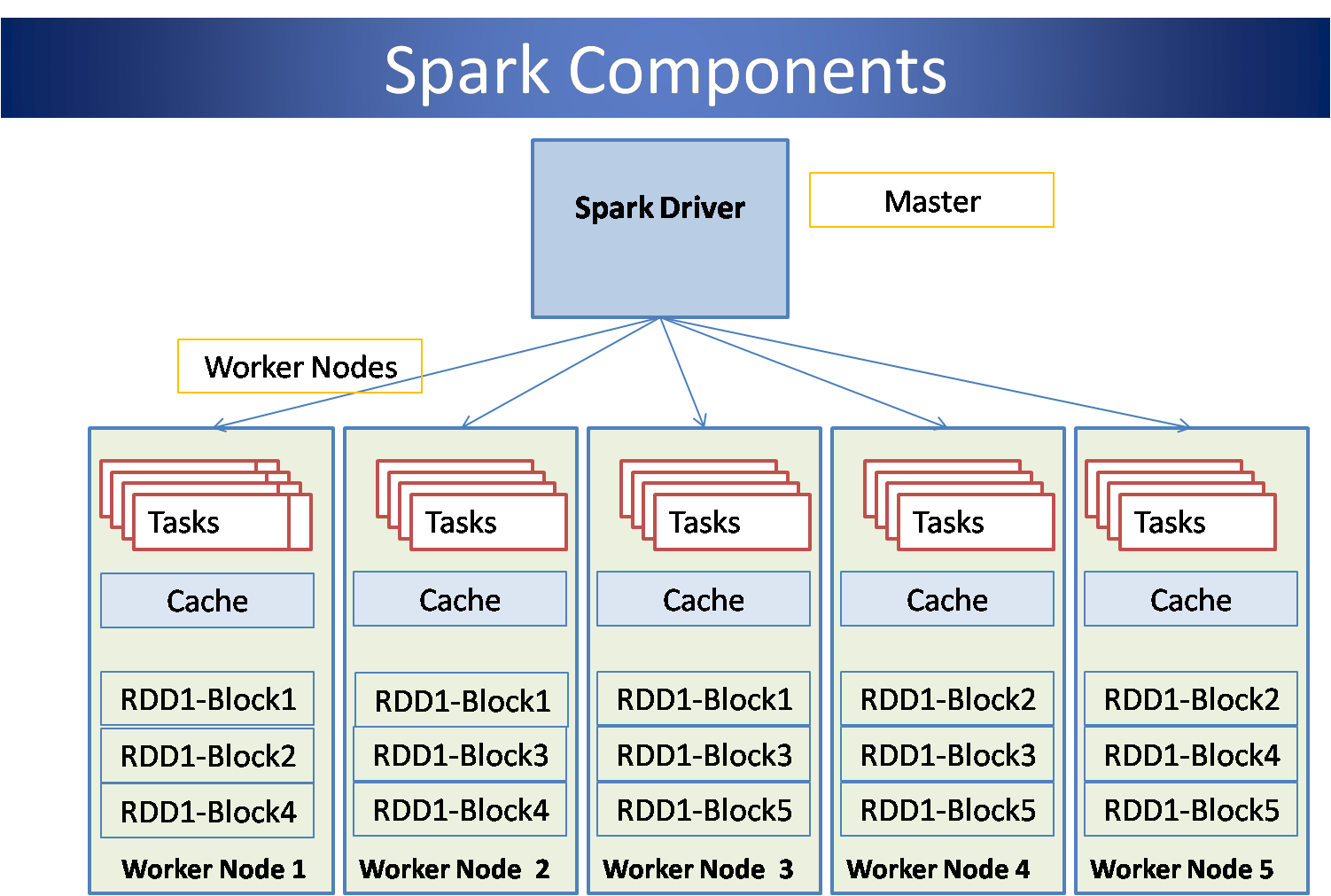

To store and process even a fraction of this amount of data, we need Big Data frameworks. Traditional databases cannot store so much data, and traditional processing systems cannot process it quickly enough. This is where frameworks like Apache Spark and MapReduce come to the rescue. They help us gain deep insights into this huge amount of structured, unstructured and semi-structured data and make more sense of it.

## Market Demands for Spark and MapReduce

Apache Spark was originally developed in 2009 at UC Berkeley by the team who later founded Databricks. Since its launch, Spark has seen rapid adoption and growth. Many cutting-edge technology organizations, such as Netflix, Apple, Facebook and Uber, run massive Spark clusters for data processing and analytics. The demand for Spark is increasing at a very fast pace. According to one report forecast, the global Apache Spark market will grow at a CAGR of 67% between 20… The global Spark market revenue is rapidly expanding and may grow to $4.2 billion by 2022, with a cumulative market value of $9.2 billion over 2019 to 2022.

MapReduce has been around a little longer: it was developed in 2006 and gained industry acceptance during its early years. But over the last 5 years or so, with Apache Spark gaining ground, demand for MapReduce as a processing engine has declined. Still, it cannot be said in black and white that Apache Spark will completely replace MapReduce in the coming years. Both technologies have their own pros and cons, as we will see below. One solution cannot fit every situation, so MapReduce will keep its takers depending on the problem to be solved. Spark and MapReduce also complement each other on many occasions.

Both these technologies have made inroads into all walks of everyday life, be it telecommunication, e-commerce, banking, insurance, healthcare, medicine, agriculture, biotechnology, etc.

## What is Spark?

As per Apache, “Apache Spark is a unified analytics engine for large-scale data processing”. It is a fast and general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming.

Instead of just “map” and “reduce” functions, Spark defines a large set of operations, called transformations and actions, which developers can combine arbitrarily. Spark does not translate them into MapReduce jobs. Its execution engine turns the chain of operations into a DAG of stages and tasks and optimizes it for performance.
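To make the transformation/action model above concrete, here is a minimal, single-machine Python sketch of the classic word count. The helpers `flat_map` and `reduce_by_key` are hypothetical stand-ins that mimic the semantics of PySpark's `RDD.flatMap` and `RDD.reduceByKey`. Unlike Spark, they run eagerly and on one machine. In PySpark, the same pipeline would typically be written as `sc.textFile(path).flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(add)`, with nothing executed until an action such as `collect()` is called.

```python
from collections import defaultdict
from functools import reduce
from operator import add
from typing import Callable, Dict, Iterable, List, Tuple


def flat_map(fn: Callable, items: Iterable) -> list:
    # Like RDD.flatMap: one input element may yield many outputs.
    return [out for item in items for out in fn(item)]


def reduce_by_key(fn: Callable, pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    # Like RDD.reduceByKey: group values by key (the shuffle in Spark),
    # then combine each key's values with fn.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: reduce(fn, values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    words = flat_map(str.split, lines)   # split lines into words
    pairs = [(w, 1) for w in words]      # map step: (word, 1)
    return reduce_by_key(add, pairs)     # sum the 1s per word


if __name__ == "__main__":
    print(word_count(["spark and hadoop", "spark"]))
```

The grouping step inside `reduce_by_key` is where Spark would shuffle data between machines. The real `reduceByKey` also combines values locally on each partition before the shuffle, which keeps the transferred data small.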


Big data is primarily defined by the volume of a data set. Big data sets are generally huge, measuring tens of terabytes and sometimes crossing the threshold of petabytes. It is surprising how much data is generated every minute.

Over 2.5 quintillion bytes (2.5 exabytes) of data are created every single day, and that figure is only going to grow. It was estimated that by 2020, 1.7 MB of data would be created every second for every person on earth. You can read DOMO’s full report, including industry-specific breakdowns.
